You Don’t Have to Be a Webmaster to Use Google’s Webmaster Tools

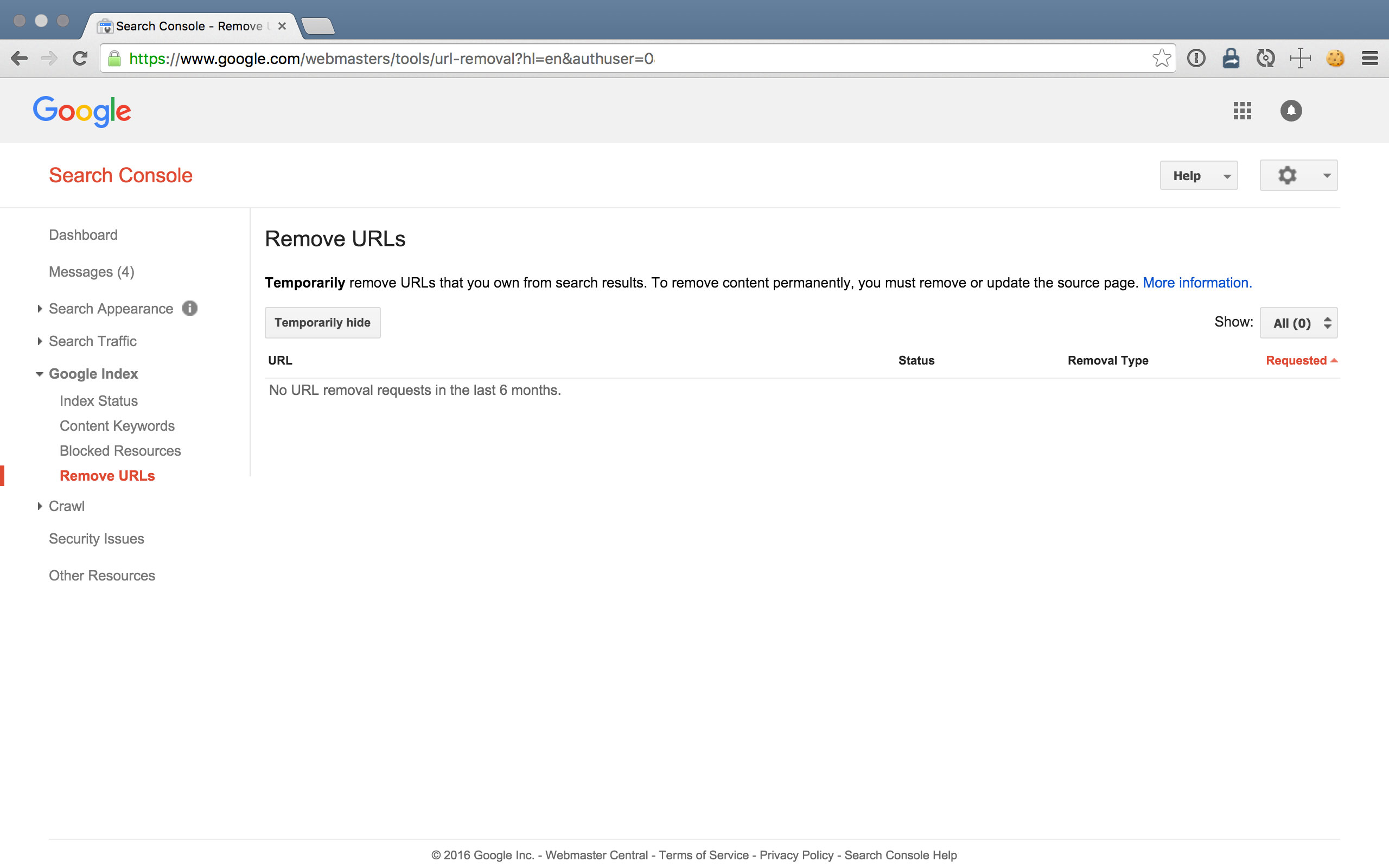

17. Remove URLs From The SERPs, Temporarily

There are many ways to keep pages on your site from finding their way into SERPs (e.g., noindex, nofollow, sitemap exclusion, robots.txt directives, and server authentication). But sometimes content just sneaks through.

Using the Remove URLs tool, you can temporarily remove pages from Google’s index. If you don’t also implement one of the aforementioned methods, however, the removal won’t last.
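Because the removal only sticks when a page also carries something like a noindex directive, it can help to confirm the tag is really in the markup. Here is a minimal Python sketch that looks for a robots meta tag in a page's HTML. The class and function names are hypothetical, and it only checks meta tags, not `X-Robots-Tag` HTTP headers or robots.txt.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> / <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attr.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, nofollow"
            for directive in content.split(","):
                directive = directive.strip().lower()
                if directive:
                    self.directives.add(directive)


def has_noindex(html):
    """Return True if the HTML contains a robots meta tag with 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
    print(has_noindex(page))
```

You would feed it the HTML of whichever page you just removed; how you download that HTML is up to you.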

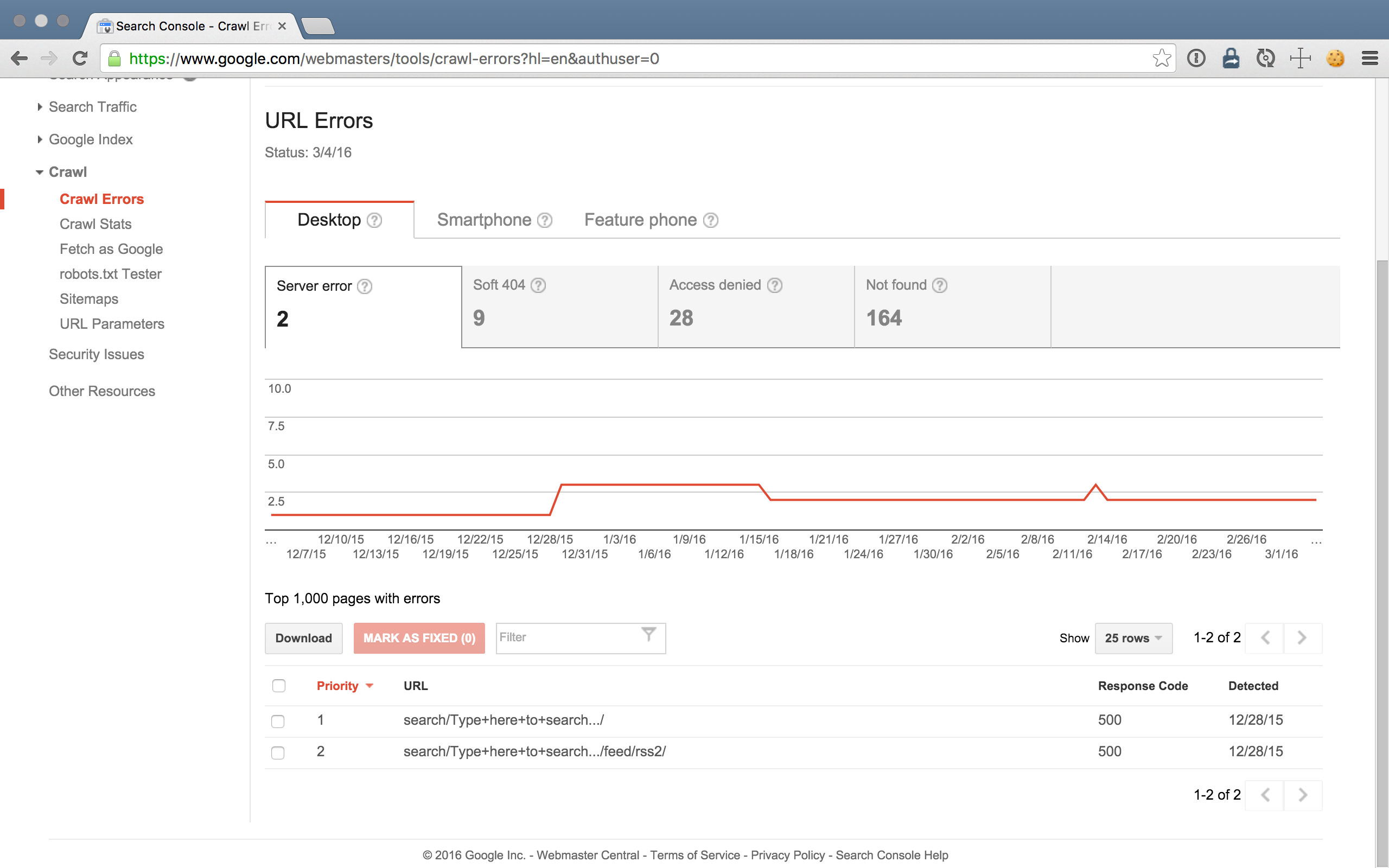

18. Crawl Errors On Your Website

In the Crawl Errors report, you can view and export the 404 errors (caused by broken links) that GoogleBot detected. Hopefully, you’ll then fix the broken links or create 301 redirects to resolve them.

Fixing 404 errors (especially those linked from other websites) is a great way to build more authority for your site and its pages.

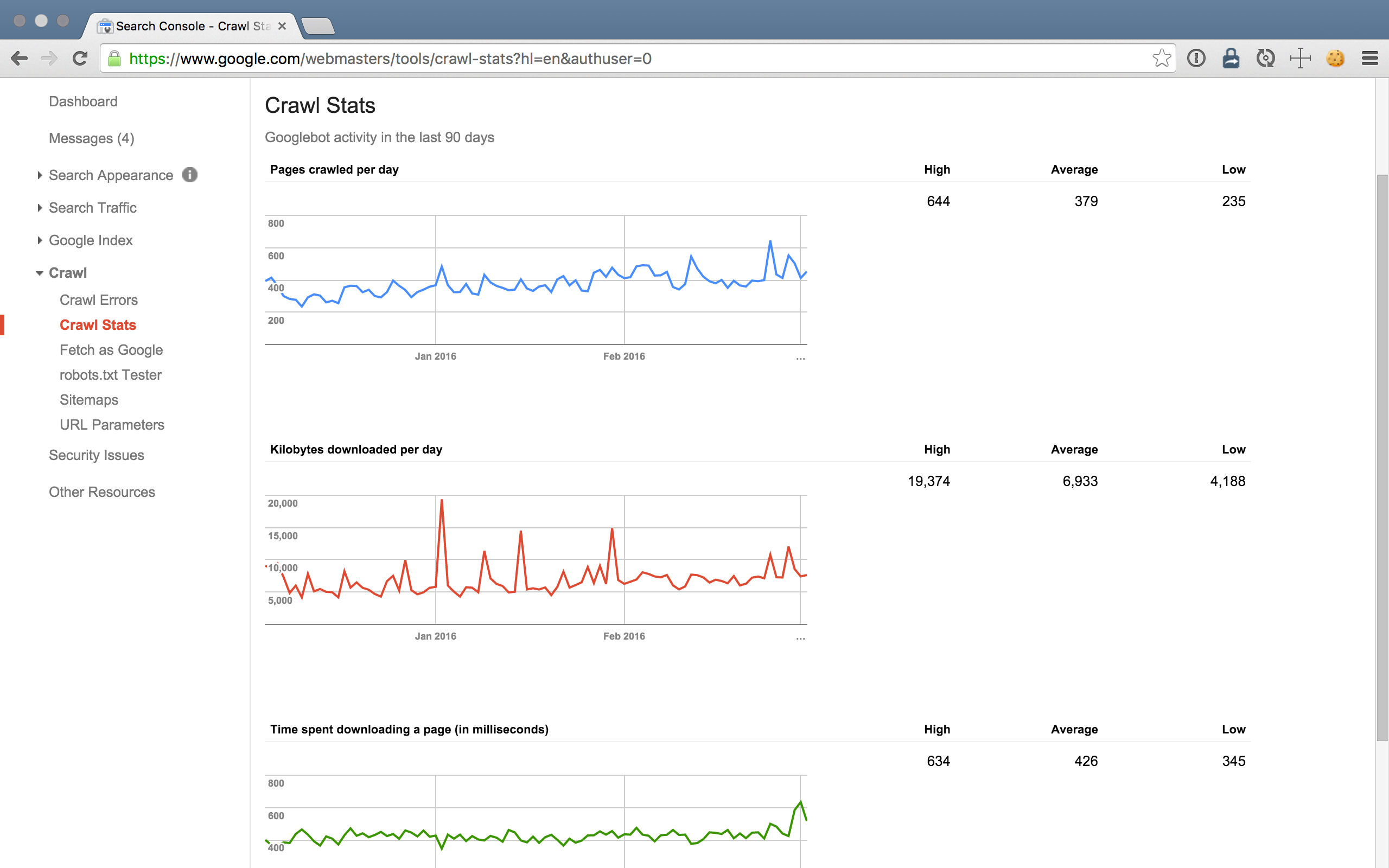

19. Crawl Stats About Your Website

The Crawl Stats report allows you to see how often GoogleBot crawls your site. It’s helpful to put some context behind all that data.

To read more about crawl optimization and why you’re winning if your pages with lower PageRank are frequently crawled, check out AJ Kohn’s post on crawl optimization.

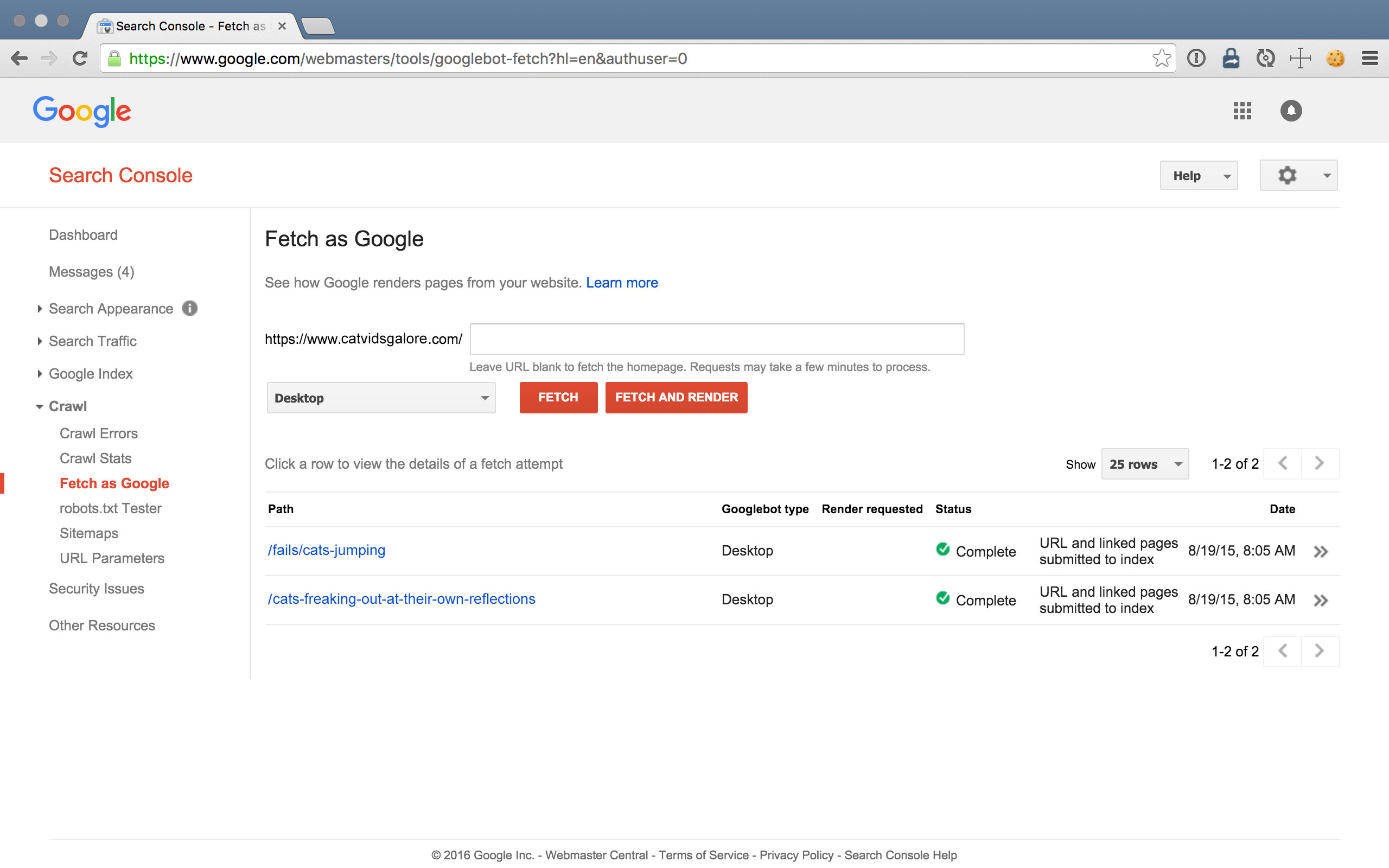

20. Fetch as Google To Test Crawlability

The Fetch As Google tool lets you run a test to ensure GoogleBot can effectively crawl particular pages on your site. Additionally, you can render the pages as GoogleBot to see which elements bots might see or miss.

Once you fetch a page, you can submit it (and its direct links) to Google’s index, so you don’t have to wait for Google to crawl your site on its own.

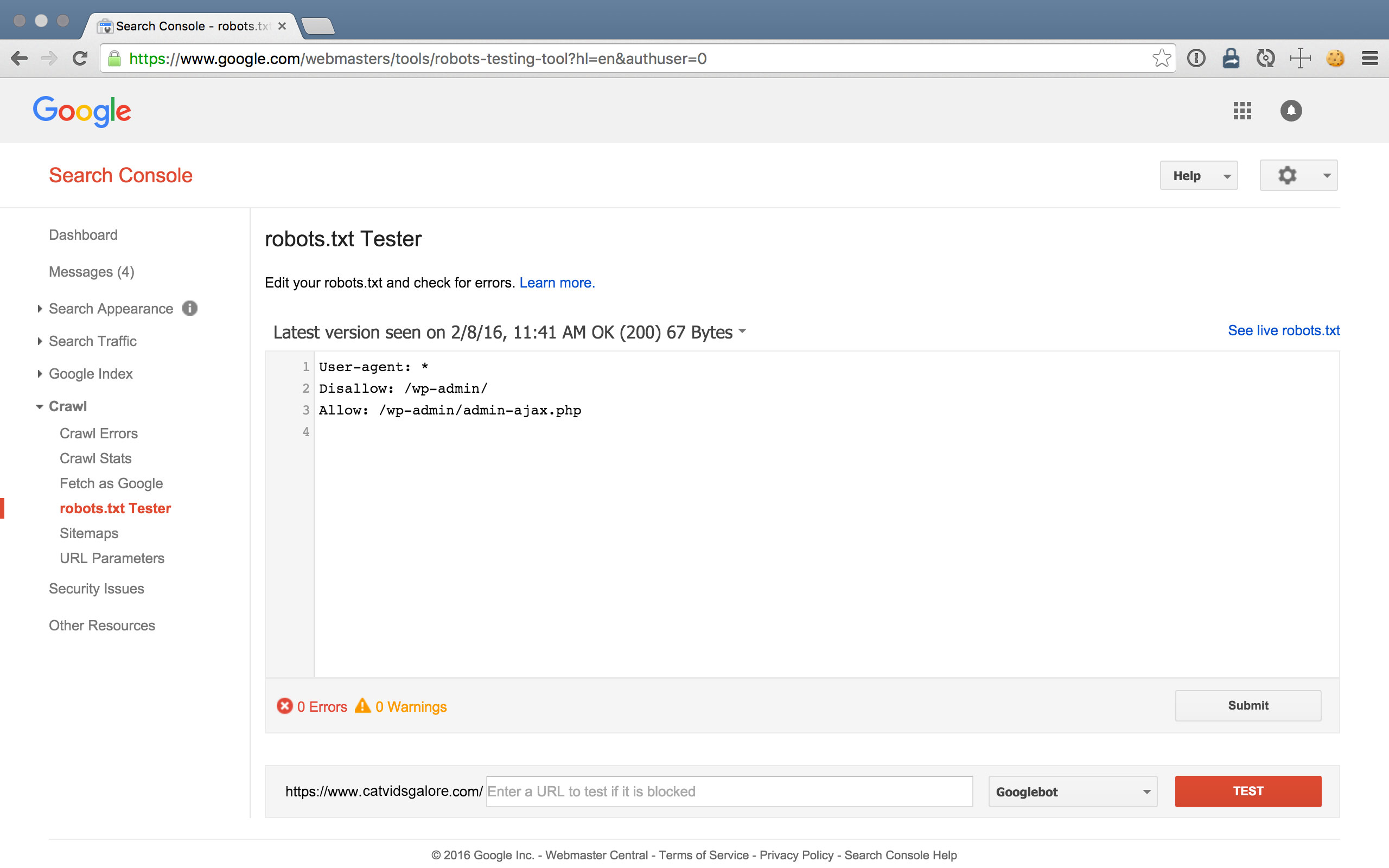

21. Robots.txt Tester For Blocking Crawlers

In addition to noindex and nofollow tags, you can instruct GoogleBot not to crawl certain site content via a simple text file that resides in your site’s root directory and follows the syntax described at http://www.robotstxt.org/. It’s a file that explicitly speaks to… you guessed it: robots.

In the robots.txt Tester section of Search Console, you can view the robots.txt file that GoogleBot discovered on your site. You can also enter a URL into the tester to see whether GoogleBot will crawl it based on your robots.txt directives.
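If you want to run the same kind of check locally, Python's standard library ships a robots.txt parser. The sketch below uses made-up rules and example.com URLs, so swap in your own. Note that Python applies rules in the order they appear, which may differ from GoogleBot's own matching in edge cases, so treat Search Console's tester as the final word.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /tmp/
"""


def is_crawlable(robots_txt, user_agent, url):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/blog/post", "/private/report"):
        url = "https://example.com" + path
        verdict = "allowed" if is_crawlable(ROBOTS_TXT, "Googlebot", url) else "blocked"
        print(url, verdict)
```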

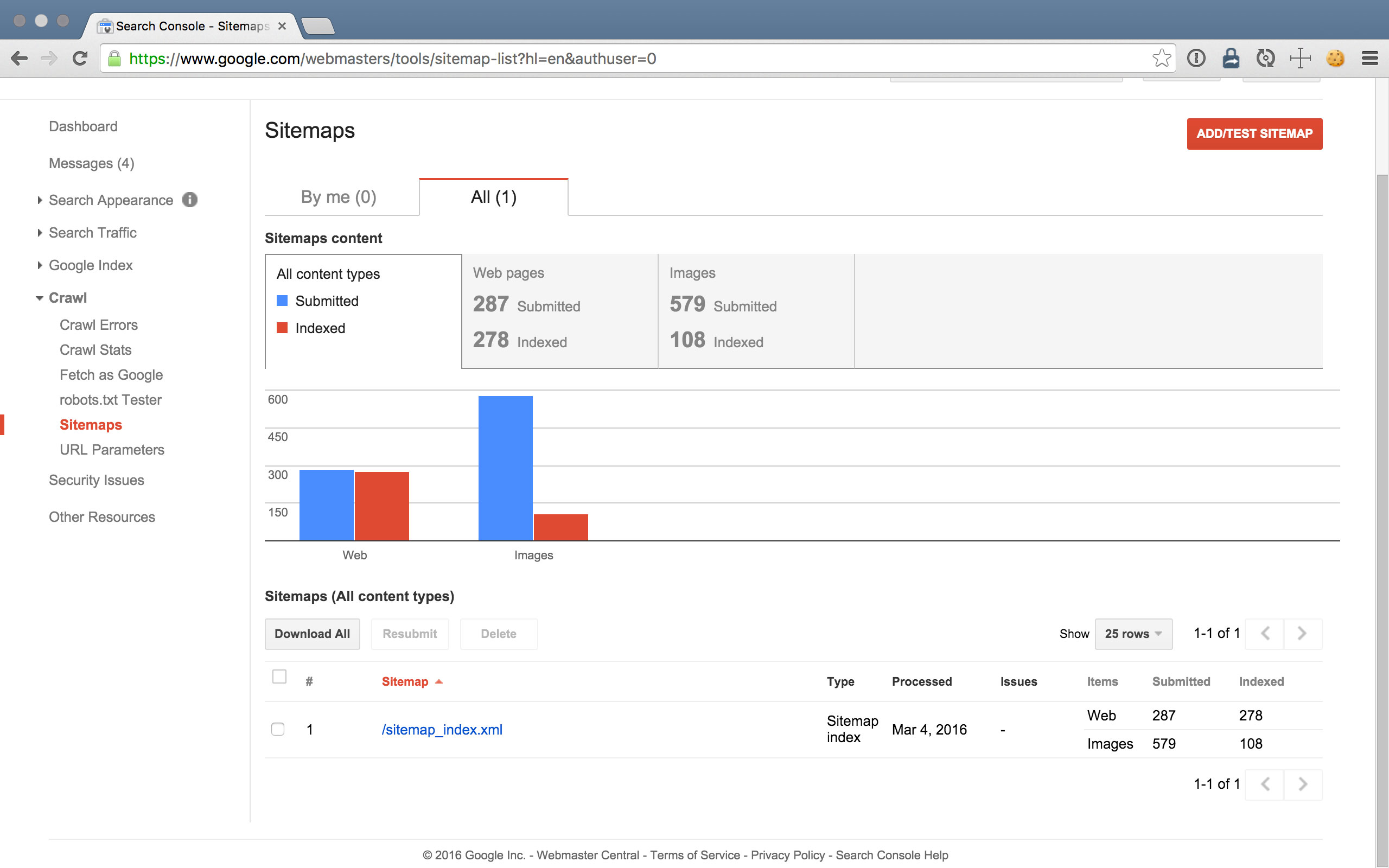

22. Sitemaps You Submitted & Indexed

We’ve reviewed a lot of sections about blocking or removing pages from Google’s index, but what about manually adding pages?

You can submit sitemap files and view sitemaps you’ve already submitted in the Sitemaps section of Search Console. Sitemaps are XML files that you can write or generate using, for example, the Yoast WordPress plugin.
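If you'd rather generate a sitemap yourself than lean on a plugin, the format is simple enough to build by hand. Here's a rough Python sketch that writes a minimal sitemap using the sitemaps.org namespace. The function name and the example.com URLs are placeholders, and it only covers the `loc` and optional `lastmod` fields.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = loc
        if lastmod:
            ET.SubElement(url_el, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Placeholder pages; replace with your site's real URLs
    pages = [
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/about", None),
    ]
    print(build_sitemap(pages))
```

Save the output as a file such as sitemap.xml on your site, then submit its URL in the Sitemaps section.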

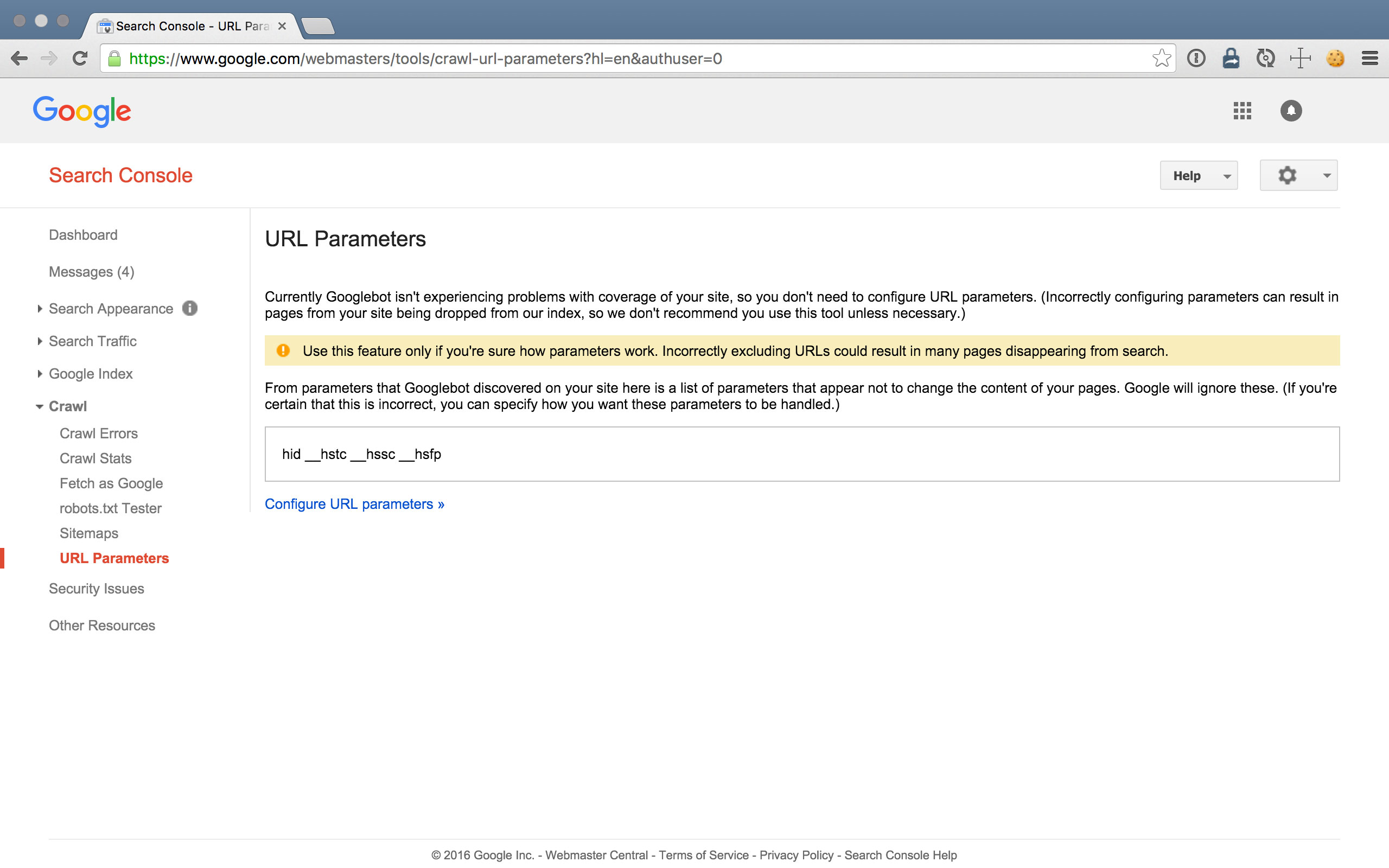

23. URL Parameters For Cleaner Data

Developers often append “GET parameters” to URLs (e.g. example.com?utm_source=google&foo=bar), which can alter the content on the page. Sometimes it’s helpful to tell GoogleBot what these parameters do to the page, and sometimes we don’t want GoogleBot to index pages with certain URL parameters or certain parameter values. The URL Parameters section in Search Console is the exact spot to do that.
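To get a feel for which parameters matter, it helps to see what a URL looks like once the tracking-only ones are stripped out. This Python sketch drops any parameter starting with `utm_` and keeps the rest. The prefix list and function name are assumptions for illustration, not anything Search Console does internally.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: parameters with these prefixes only track traffic
# and never change what the page shows
TRACKING_PREFIXES = ("utm_",)


def strip_tracking_params(url):
    """Return url with tracking-only query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.startswith(TRACKING_PREFIXES)
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


if __name__ == "__main__":
    print(strip_tracking_params("https://example.com/?utm_source=google&foo=bar"))
```

If two URLs collapse to the same address after stripping, their parameters probably don't change the page content, which is the kind of thing you'd report in the URL Parameters section.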

24. Security Issues With Your Site’s Content

Search Console’s Security Issues report flags most security issues with your site’s content that may hurt the effectiveness of your search listing.

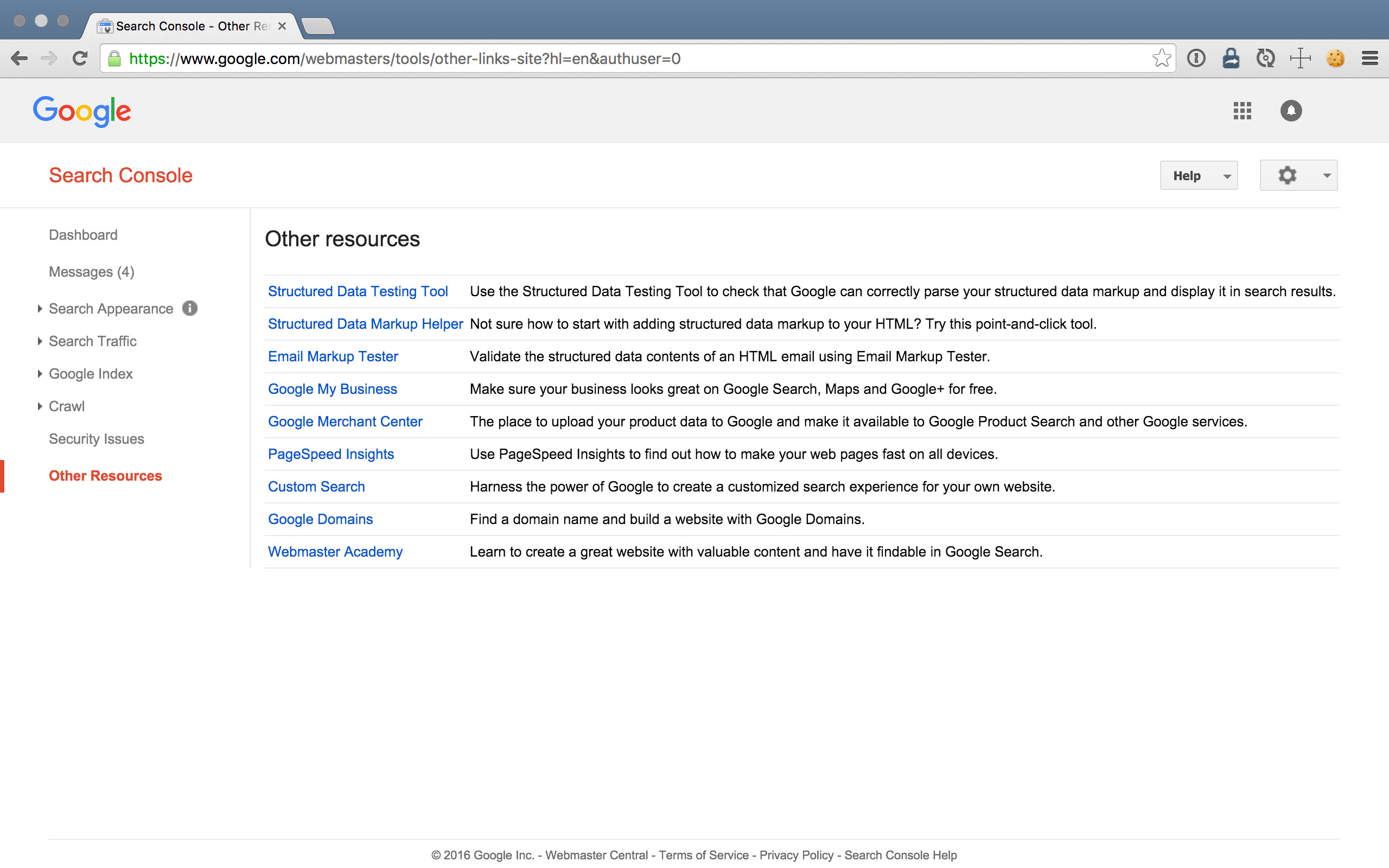

25. Other Resources

In the Other Resources section, Search Console provides several insightful articles for further maintaining your site’s presence in Google’s search results.

Conclusion

In conclusion, use Search Console! It’s free, robust and offered by Google. If there’s one expert source/tool for SEO, it’s going to be the #1 “SE” in the world…

But remember, technical SEO efforts only begin with Search Console. Here are just a few other strategies we at WebMechanix commonly deploy:

- It’s important to create helpful (and branded) 400-level and 500-level error pages (learn more about all HTTP status codes here).

- You should always test and optimize page size and load speed (Tools: GTMetrix, Pingdom).

- Optimize for mobile and other slow-speed situations (see Chrome DevTools’ built-in device emulator and tools like Google’s mobile-friendly test).

- Comb your site for (and remove) duplicate page content, as that’s a big SEO no-no.

- Install a (non-self-signed) SSL certificate, which gives you a small boost on SERPs (free SSLs).

- Before launching a new site or a redesign, go over all the items on this excellent Web Dev Checklist.
