Unlocking the Secret: Why Only 20% of Tech SEO Matters

Technical SEO (Tech SEO) is a crucial yet often overlooked cornerstone among the four main pillars of SEO, frequently misunderstood and underused. Many Tech SEO initiatives hinge on completing exhaustive checklists or audits generated by SEO tools, with SEO professionals dedicating countless hours to addressing each item, only to see minimal impact from their efforts.

Despite ongoing debates about its relevance, Google Search Advocate John Mueller has underscored the significance of Tech SEO, recently addressing the misconception that its importance is diminishing. In a response on X (formerly Twitter), Mueller wrote:

“It does not say page experience is somehow ‘retired’ or that people should ignore things like Core Web Vitals or being mobile-friendly. The opposite. It says if you want to be successful with the core ranking systems of Google Search, consider these and other aspects of page experience.”

This statement reinforces the idea that a technically optimized website remains a fundamental component of any robust SEO strategy, yet many SEOs still hesitate to engage deeply with Tech SEO.

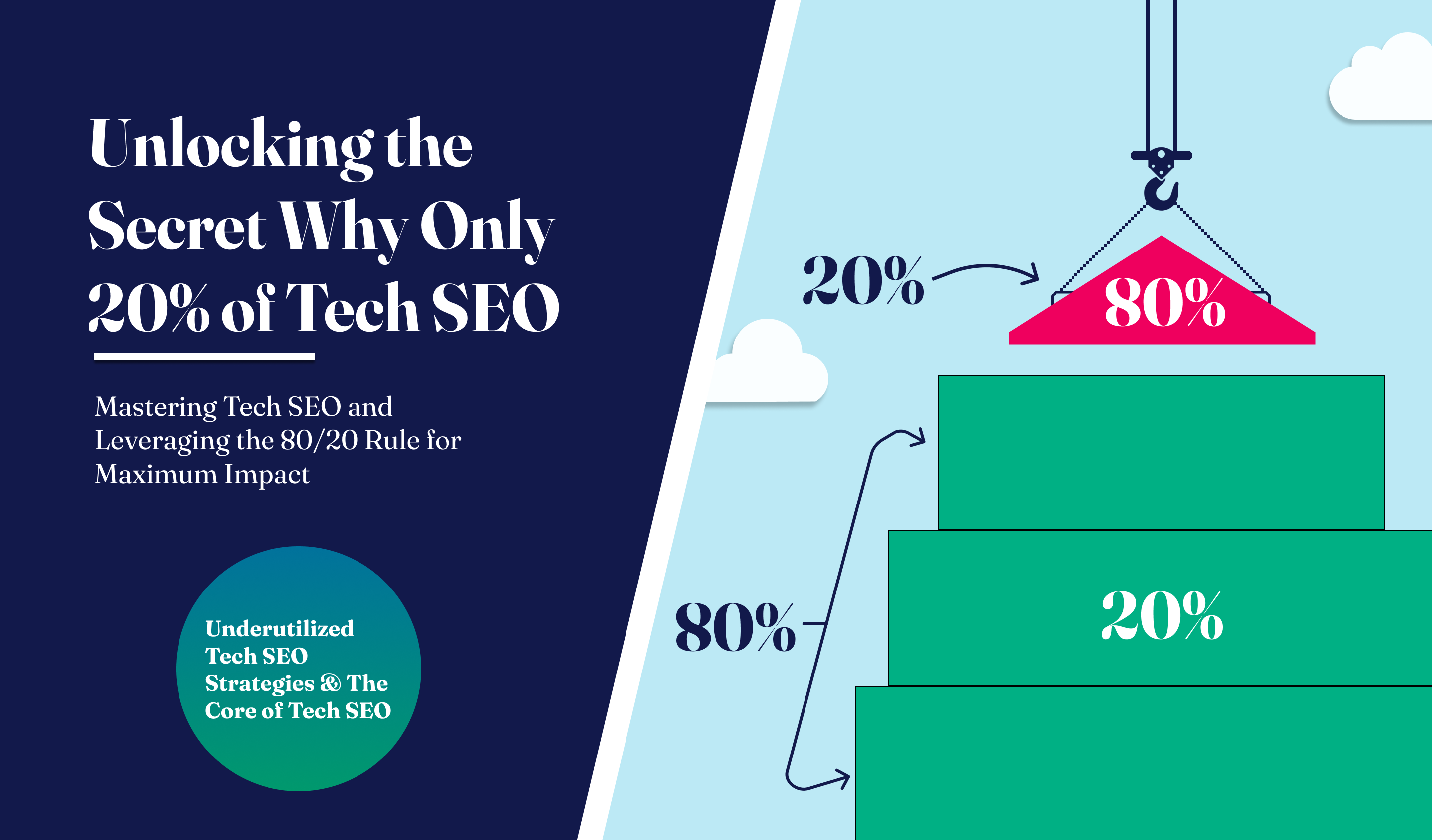

Tech SEO tasks differ significantly in their impact. This is where the 80/20 rule, or Pareto Principle (named after Italian economist Vilfredo Pareto), comes in: by concentrating on the 20% of Tech SEO tasks that yield the most significant results, marketers can achieve roughly 80% of their overall outcomes.

This article aims to dissect which Tech SEO tasks truly drive the majority of results, identify those that might be less impactful, and explore the reasons behind these dynamics.

Distinguishing Between SEO and Tech SEO

Before we delve into Tech SEO systems, strategies, and best practices, it’s crucial to understand how Tech SEO differs from the other pillars of SEO. The four main pillars of SEO are:

- Technical SEO (Tech SEO)

- On-Page SEO

- Off-Page SEO

- Content

The latter three pillars encompass a variety of strategies and practices aimed at boosting a website’s visibility in search engine results pages (SERPs), focusing on aspects other than the technical side. This broad spectrum includes:

- Keyword Research

- Content Creation

- Link Building

- Page Optimization

Each of these elements significantly influences how search engines and, consequently, potential visitors perceive a website.

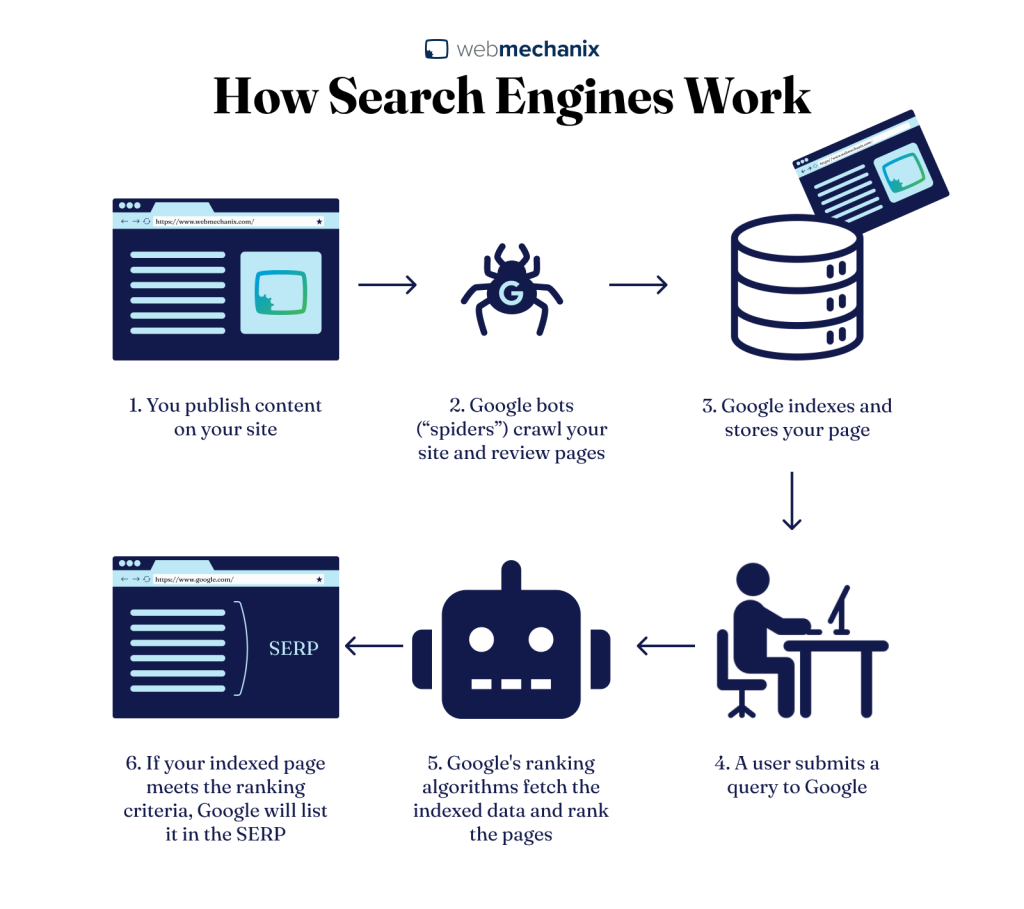

Conversely, Tech SEO is dedicated to optimizing the technical elements essential for a site’s efficient crawling and indexing by search engines.

Tech SEO lays the groundwork for all other SEO efforts. Without a website that’s technically sound, the most compelling content and robust backlink profiles might not reach their full potential in SERP visibility. Thus, Tech SEO isn’t merely about addressing issues. It’s about proactively preparing a website for success.

Despite its critical importance, many perceive Tech SEO as daunting or overly complex. Recognizing this, our approach aims to demystify Tech SEO, breaking down seemingly complex tasks into simpler, more manageable actions. By doing so, we intend to show that with the right guidance and understanding, mastering Tech SEO is far less intimidating than it appears.

Identifying The Vital 20% for Tech SEO Focus and Their Best Practices

Leveraging our extensive 15-year history in the SEO landscape, we at WebMechanix have combined our deep-seated knowledge with recent insights from Google Core Algorithm Updates to distill the essence of the most impactful technical strategies.

This blend of historical expertise and up-to-date algorithm familiarity has empowered us to identify the critical 20% of tasks that drive the majority of results.

Among these pivotal tasks, here are some of the more commonly known ones that are vital to perform:

- Mobile Optimization

- Page Speed (Core Web Vitals)

- HTTPS and Security

- XML Sitemaps

- Robots.txt

- Crawl Errors

- Duplicate Content

- Website Architecture

- URL Structure

- Broken Links

- Schema Markup

Focusing on these areas ensures that your SEO efforts are not just comprehensive but also impactful. It’s important to remember, however, that while these tasks are among the most recognized, they are not the only ones that belong in the crucial 20%.

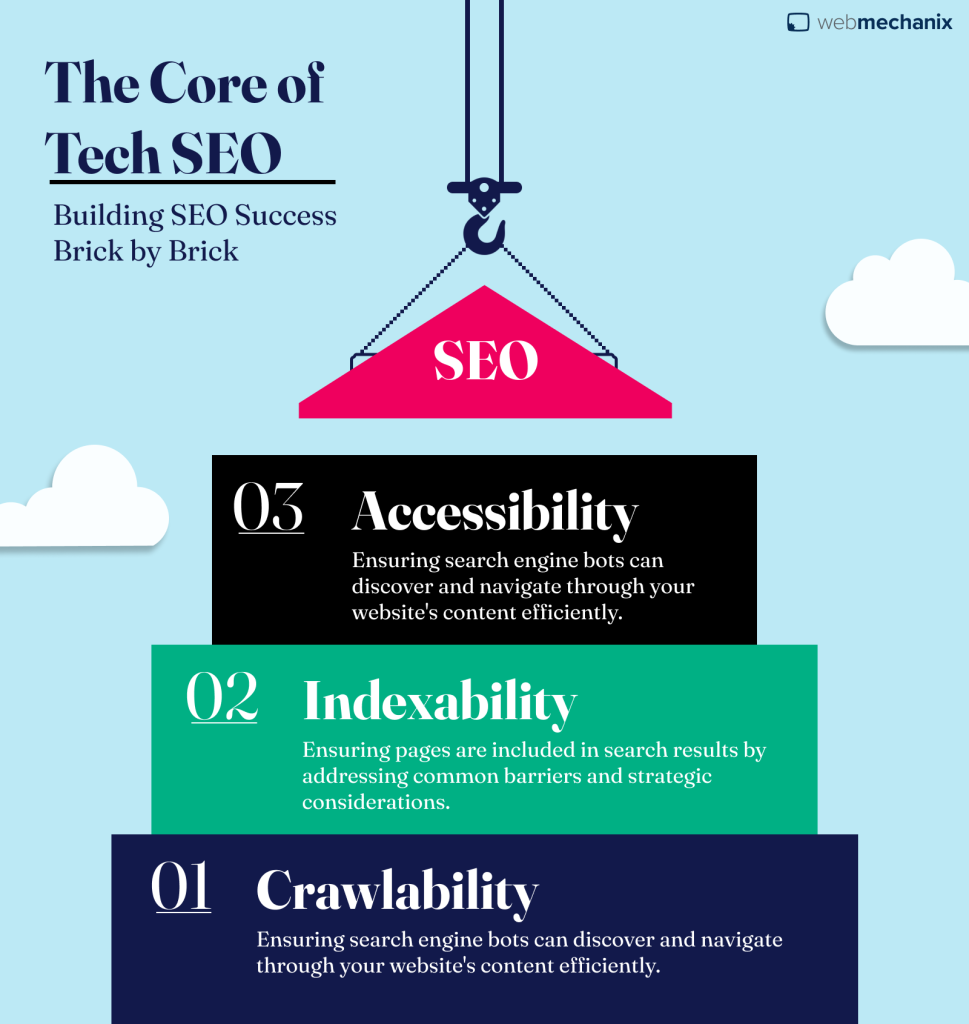

The Core of Tech SEO

For SEO strategies to be impactful, the foundational elements of Tech SEO must be addressed first. These core tasks form the bedrock of a website’s ability to rank and attract traffic:

- Crawlability

- Indexability

- Accessibility

Understanding the nuances of each of these crucial aspects not only demystifies the complexities of Tech SEO but also ensures the establishment of a solid, technically sound foundation for your website.

Crawlability

Crawlability stands as the cornerstone of Tech SEO, crucial for ensuring that search engines can discover and navigate your website’s content.

Crawlability refers to the ability of search engine bots to access and navigate through the entire structure of a website, identifying all accessible pages. This capability is essential for a website to be indexed and, subsequently, to appear in the SERPs.

What Crawlability Entails:

- Accessibility to search engine bots through well-structured navigation and sitemaps, allowing for efficient discovery and cataloging of website content.

- Proper use of robots.txt to guide search engines to the content meant to be crawled, specifying which areas of the site should be explored or ignored.
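As a quick illustration of the robots.txt point above, the following minimal sketch uses Python's standard `urllib.robotparser` module to check which URLs a crawler may fetch. The robots.txt content, the `example.com` URLs, and the helper name `crawlable_paths` are assumptions made up for the example, not rules from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Allow: /
"""


def crawlable_paths(robots_txt: str, user_agent: str, urls: list) -> dict:
    """Return a mapping of URL -> whether `user_agent` is allowed to fetch it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    checks = crawlable_paths(
        ROBOTS_TXT,
        "Googlebot",
        [
            "https://www.example.com/blog/post",
            "https://www.example.com/admin/settings",
        ],
    )
    for url, allowed in checks.items():
        print(("ALLOWED " if allowed else "BLOCKED ") + url)
```

Running a check like this against your key landing pages is a cheap way to catch a stray `Disallow` rule. Note that Python's parser is a simplified model and may not match every detail of how a specific search engine interprets robots.txt.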

Enhancing the crawlability of your website involves a series of strategic actions aimed at making it easier for search engine bots to discover and navigate through your site’s content.

Here’s how to ensure your website and its pages are optimally crawlable:

- Ensure internal links are logical and functional.

- Regularly update sitemaps for search engines to discover new pages.
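To make the sitemap point concrete, here is a small sketch that builds an XML sitemap with Python's standard library. The page list and the function name `build_sitemap` are hypothetical; in practice the URLs and dates would come from your CMS or a crawl.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list) -> str:
    """Build sitemap XML from (absolute URL, last-modified YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages, for illustration only.
    print(build_sitemap([
        ("https://www.example.com/", "2024-01-10"),
        ("https://www.example.com/blog/new-post", "2024-01-12"),
    ]))
```

Regenerating the file whenever pages are published or removed keeps it in step with the site without manual edits.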

In the realm of Tech SEO, the approach to improving crawlability is strategic and data-driven. By analyzing how search engines discover and prioritize pages, Tech SEO aims to refine the crawling process. This involves leveraging analytics on past crawl patterns and frequencies, as well as understanding the triggers that lead to increased search engine crawling.

Indexability

Being crawlable doesn’t guarantee indexation. Indexability refers to a search engine’s ability to add your pages to its index, which is a prerequisite for appearing in search results.

Common reasons why pages are crawled but not indexed include:

- Poor Content Quality

- Canonical Issues

- Slow Loading Times

- Redirect Chains

- JavaScript Issues

- Server Errors

- Flash or Non-HTML Content

- URL Parameters
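URL parameters are a common source of the duplicate and canonical issues listed above, because the same page can be reachable under many query strings. The sketch below shows one possible way to normalize URLs before comparing them in a crawl export. The list of tracking parameters and the function name `normalize_url` are assumptions for illustration; which parameters are safe to drop depends on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed set of parameters that do not change page content on this site.
TRACKING_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
    "gclid", "fbclid",
}


def normalize_url(url: str) -> str:
    """Drop tracking parameters and fragments, and sort what remains."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    )
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path or "/", urlencode(kept), "")
    )


if __name__ == "__main__":
    variants = [
        "https://Example.com/shoes?utm_source=news&color=red&size=9",
        "https://example.com/shoes?size=9&color=red#reviews",
    ]
    # Both variants collapse to the same normalized URL.
    print({normalize_url(u) for u in variants})
```

If many crawled URLs collapse to the same normalized form, that is a hint that a canonical tag or parameter handling deserves attention.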

There are strategic reasons why an SEO might decide against having certain pages indexed, despite common SEO tools marking such exclusions as critical errors. These reasons include:

- Pages Containing Sensitive Information

- Duplicate Pages for Internal Use

- User-Specific Content

- Archived Content

It’s essential to critically evaluate the necessity of indexing each page, taking into account both the strategic considerations of SEO and the automated assessments provided by SEO tools. This approach ensures that your actions are aligned with your overall SEO goals, rather than being solely guided by tool-generated alerts which may not fully comprehend your site’s specific indexing strategy.

Tech SEO rigorously checks and adjusts key settings to guarantee that appropriate pages are optimally positioned for indexing. This strategic alignment ensures that only the intended pages enhance your website’s visibility in search results.
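Two of the settings most often checked here are the meta robots tag and the canonical link. As a minimal sketch, the following reads both from a page's HTML using Python's built-in parser. The class and function names are hypothetical, and the check covers only the HTML; an `X-Robots-Tag` HTTP header can also carry a noindex directive and would need a separate check.

```python
from html.parser import HTMLParser
from typing import Optional


class IndexSignalParser(HTMLParser):
    """Collects meta robots directives and the canonical URL from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.robots: list = []
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() == "robots":
            directives = attr.get("content", "").split(",")
            self.robots += [d.strip().lower() for d in directives if d.strip()]
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href") or None


def index_signals(html: str, url: str) -> dict:
    """Summarize whether `url` asks to be excluded or points elsewhere."""
    parser = IndexSignalParser()
    parser.feed(html)
    return {
        "noindex": "noindex" in parser.robots,
        "canonical": parser.canonical,
        "canonical_points_elsewhere": (
            parser.canonical is not None and parser.canonical != url
        ),
    }


if __name__ == "__main__":
    # Hypothetical page markup, for illustration only.
    page = (
        '<head><meta name="robots" content="noindex, follow">'
        '<link rel="canonical" href="https://www.example.com/a"></head>'
    )
    print(index_signals(page, "https://www.example.com/a?ref=nav"))
```

Comparing this output with your intended indexing strategy, page by page, separates deliberate exclusions from accidental ones.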

Ensuring Accessibility

Accessibility, alongside website performance, is the third of our Tech SEO fundamentals. In essence, accessibility means that the URLs on your website can be reliably loaded and rendered, not just by users but also by search engine bots.

While most crawled and indexed pages are primed for ranking in SERPs, issues can arise if they’re not accessible at the crucial moment search engines attempt to access them.

Maintaining consistent accessibility for both users and bots is paramount. It not only elevates the user experience but also safeguards against potential penalization in search rankings.

Many of the essential methods to ensure your URLs are accessible overlap with the common pivotal tasks to perform:

- HTTPS and Security

- Page Speed (Core Web Vitals)

- Mobile Optimization (a newer addition to accessibility, following a recent Google Core Update)

- Website Architecture

While many tasks associated with crawlability and indexability align with the efficient 80/20 rule of SEO optimization, a substantial portion of accessibility-related tasks may not. This discrepancy exists because search engines generally accept a baseline level of accessibility. Staying above this baseline ensures your site remains functional and competitive in SERPs, but significantly exceeding this standard might not yield proportional returns on investment.

Here, the value of Tech SEO tools becomes apparent. These tools can assess a wide array of factors, from server downtime and HTTP status responses to resource sizes and loading times, with metrics like Time to First Byte (TTFB), First Contentful Paint (FCP), and Largest Contentful Paint (LCP) providing insights into your site’s performance. For many sites, leveraging tools such as Google’s Lighthouse or the Core Web Vitals report in Google Search Console is sufficient for monitoring and maintaining essential accessibility standards.
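The baseline idea can be expressed as a simple classification. The sketch below rates TTFB, FCP, and LCP measurements against the "good" and "poor" thresholds Google has published on web.dev; treat the numbers as assumptions to verify against current documentation, since thresholds can change. The function name `rate_metric` and the sample measurements are hypothetical.

```python
# (good_up_to_ms, poor_above_ms), taken from web.dev guidance; verify before relying on them.
THRESHOLDS_MS = {
    "TTFB": (800, 1800),
    "FCP": (1800, 3000),
    "LCP": (2500, 4000),
}


def rate_metric(metric: str, value_ms: float) -> str:
    """Classify a measurement as good, needs improvement, or poor."""
    good, poor = THRESHOLDS_MS[metric]
    if value_ms <= good:
        return "good"
    if value_ms <= poor:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    # Hypothetical measurements for one page, in milliseconds.
    measurements = {"TTFB": 620, "FCP": 2100, "LCP": 4300}
    for metric, value in measurements.items():
        print(f"{metric}: {value} ms -> {rate_metric(metric, value)}")
```

In 80/20 terms, a page rated "poor" is worth fixing, while pushing a "good" page further is where returns start to diminish.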

Be mindful of additional accessibility considerations such as:

- Unoptimized Heavy Media Files

- Orphan Pages

- JavaScript Rendering

- Server Performance

- Interstitials and Pop-Ups

Once you’ve addressed the fundamental accessibility concerns, you’ve essentially laid the groundwork as per the core principles of Tech SEO and the practical 80/20 rule. Beyond this point, while there remain impactful tasks that fit within this optimization framework, the majority of websites should already boast a well-functioning platform poised for top rankings.

Exploring Underutilized Tech SEO Strategies

Diving deeper into the realm of Tech SEO reveals a host of strategies that, while not always at the forefront of SEO discussions, hold substantial potential to elevate your site’s search engine performance within the 80/20 rule. These underexplored tactics can provide competitive advantages and are worth integrating into your SEO arsenal.

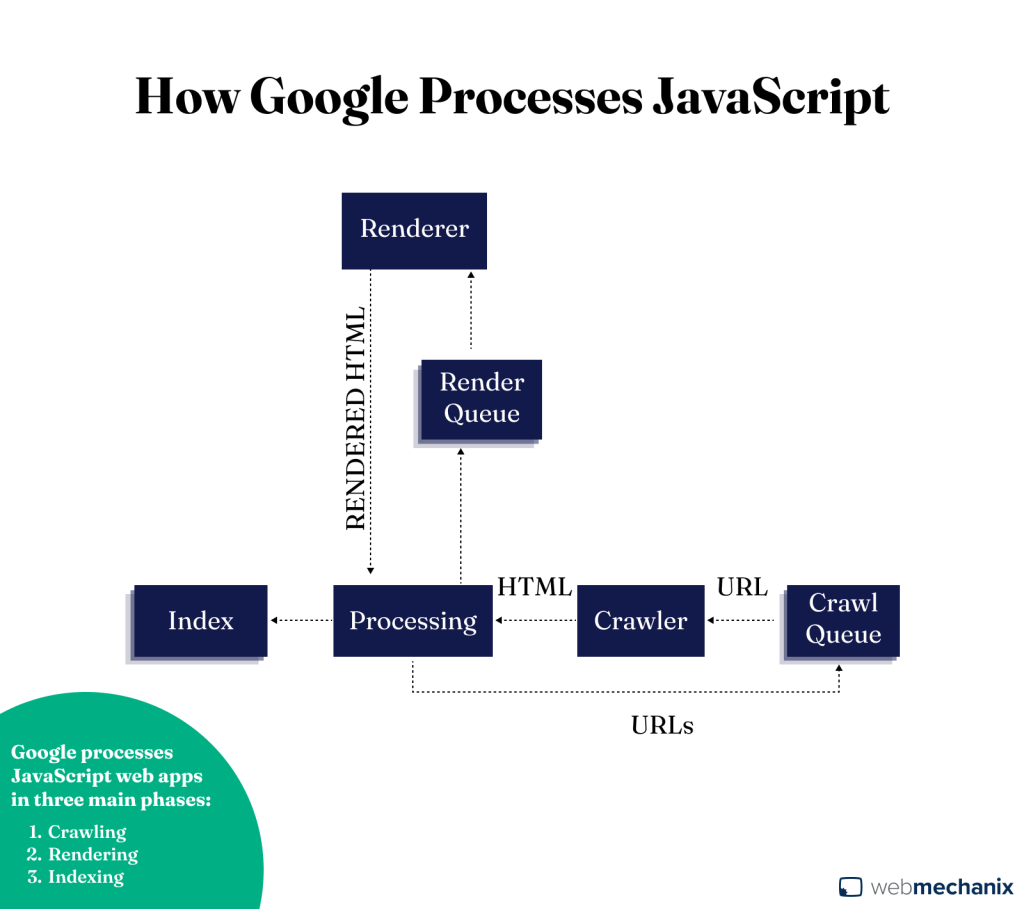

JavaScript and SEO

JavaScript SEO is an essential facet of Tech SEO aimed at optimizing JavaScript-heavy websites for better crawlability, indexing, and search engine visibility. The objective is to ensure these dynamic and interactive websites not only get discovered but also achieve higher rankings in search engine results.

Contrary to some misconceptions, JavaScript is neither detrimental to SEO nor inherently problematic. It represents a shift from traditional web development practices, introducing a learning curve for SEO professionals accustomed to static HTML.

Leveraging server-side rendering (SSR) frameworks (e.g., Next.js for React, Nuxt.js for Vue) is pivotal. These frameworks pre-render HTML on the server, improving crawlability and page speed. Additionally, SEO-friendly modules like React Helmet enhance the management of SEO elements:

- SSR Frameworks: Enhance crawlability and speed.

- SEO Modules: Simplify SEO element management.

JavaScript’s dynamic features enhance user experience but can affect site performance. Considerations include:

- Development Ease vs. Performance: JavaScript facilitates complex features but may require optimizations for speed.

- Selective Use: Employ JavaScript where it adds significant value, and consider alternative solutions for simpler tasks.

Some key strategies for JavaScript SEO are:

- Enhance Interactivity: Use JavaScript for meaningful user engagement improvements.

- Optimize Loading Times: Implement SSR and lazy loading to minimize performance impacts.

- Adapt SEO Practices: Apply traditional SEO techniques within a JavaScript framework, ensuring content is both accessible and engaging.

By integrating these focused strategies, websites can maintain the interactive benefits of JavaScript while ensuring optimal SEO performance.

This approach supports a balance between providing a rich user experience and meeting search engine requirements for visibility and ranking.

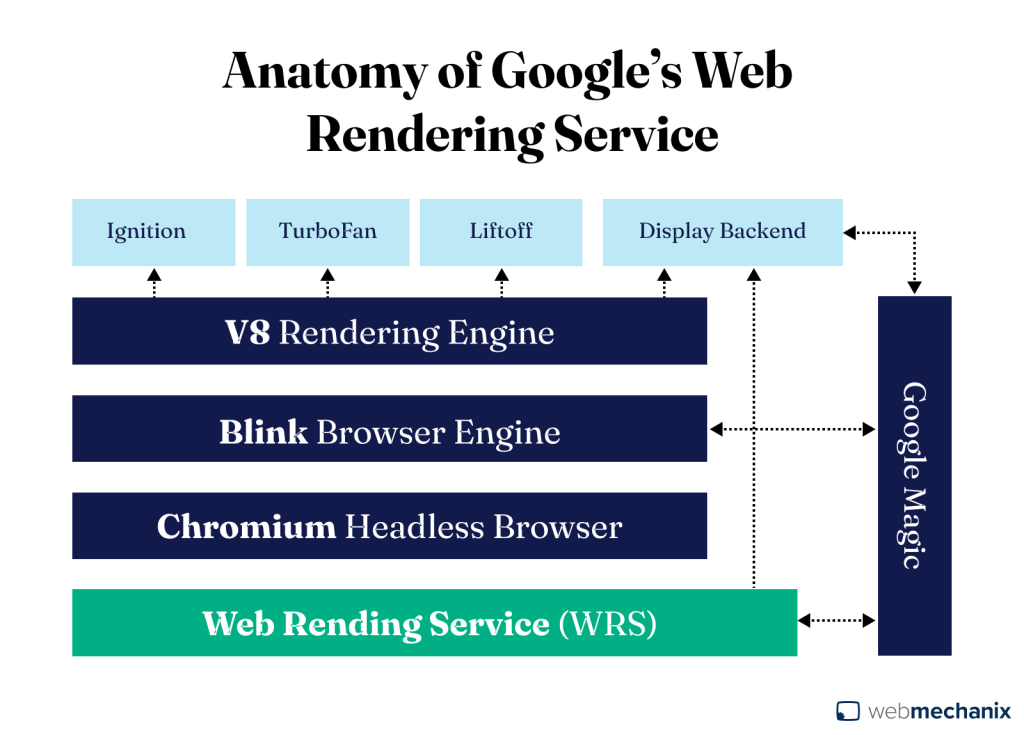

Optimizing Web Rendering

Rendering transforms the skeleton of your website’s code into the vibrant, interactive experience encountered by users and scrutinized by search engines. This seamless conversion from raw HTML to a dynamic webpage is not just technical alchemy; it’s a fundamental step that shapes your site’s SEO. How well this process is executed dictates the ease with which search engines can digest and evaluate your content, ultimately influencing your rankings in the digital ecosystem.

At its core, rendering is about transforming the initial HTML received from your server into the fully interactive page seen by users—known as the Document Object Model (DOM). This process involves executing JavaScript, applying CSS styles, and loading images.

Key Strategies for Effective Rendering:

- Server-Side Rendering (SSR) vs. Client-Side Rendering (CSR):

  - SSR improves SEO by delivering fully rendered pages from the server, making content immediately accessible to search engines and users.

  - CSR, while dynamic, relies on the browser to render content, which can delay search engines’ access to your content.

- Optimize JavaScript Execution:

  - Utilize frameworks like Next.js, Nuxt.js, or Angular Universal for SSR, reducing the initial load time and making your content more indexable.

  - Minimize client-side JavaScript to essential functions, reducing the load on browsers and speeding up content visibility.

- Enhance Page Loading with Modern Web Technologies:

  - Implement lazy loading for non-critical resources, ensuring that important content loads first without waiting for everything else.

  - Prioritize critical CSS and defer the rest, streamlining the rendering path for faster access to content.

Optimal rendering is crucial for search visibility and user experience. Regularly evaluating your site’s rendering ensures it’s accessible and correctly indexed. Here are streamlined methods to assess rendering health:

- Google Search Console’s URL Inspection Tool: Use this to view how Googlebot sees your page, comparing the crawled page’s HTML to what’s actually rendered in browsers.

- View Rendered Source vs. Initial HTML: Tools like Chrome’s View Rendered Source extension can highlight differences, helping identify potential rendering issues affecting SEO.

- Performance Analysis: Use Lighthouse to identify performance bottlenecks that could be impacting rendering and, by extension, SEO.
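The comparison between initial and rendered HTML described above can also be scripted. This is a rough sketch, assuming you have already saved both versions of a page (for example, the raw server response and the rendered DOM exported from a browser); it lists the text that appears only after JavaScript runs. The class and function names and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text chunks, skipping script and style content."""

    SKIPPED_TAGS = {"script", "style", "noscript", "template"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = " ".join(data.split())
        if text and not self._skip_depth:
            self.chunks.append(text)


def visible_text(html: str) -> list:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.chunks


def js_only_text(initial_html: str, rendered_html: str) -> list:
    """Return text present in the rendered page but missing from the initial HTML."""
    initial = set(visible_text(initial_html))
    return [text for text in visible_text(rendered_html) if text not in initial]


if __name__ == "__main__":
    initial = '<body><h1>Shoes</h1><div id="app"></div><script>render()</script></body>'
    rendered = ('<body><h1>Shoes</h1><div id="app"><p>Red sneakers, $49</p></div>'
                '<script>render()</script></body>')
    print(js_only_text(initial, rendered))
```

Anything this reports depends on JavaScript execution to be seen, so it is the first content to review if pages are indexed with missing text.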

While JavaScript enhances interactivity and user experience, it introduces complexities in rendering that can impact SEO. Embracing SSR, optimizing resource loading, and ensuring content is accessible in both the page’s initial and rendered states are crucial steps.

By acknowledging rendering’s nuances and implementing these strategies, you can significantly improve your site’s Tech SEO, making your content more discoverable and rank-worthy.

Log File Analysis

Log file analysis stands as a crucial yet often overlooked component of Tech SEO. It goes beyond mere identification of 404 errors or tracking Google’s crawl frequency; it’s about gaining a deep understanding of how search engine bots, especially Googlebot, navigate and prioritize your content. This analysis can uncover hidden insights into your site’s structural efficacy and content quality from the perspective of search engines.

Why Log File Analysis Matters:

- Unparalleled Insights: It offers a detailed view of bot interactions, revealing crawl anomalies, inefficiencies, and overlooked content.

- Quality Visualization: By analyzing crawl patterns, you can infer the “predicted quality” Google assigns to your pages, a factor crucial for ranking.

- Optimization Guide: Identifying how bots crawl and what they prioritize allows you to fine-tune your site’s architecture and content strategy.

Log file analysis is a critical exercise for any Tech SEO strategy, offering direct insights into how search engines interact with your website. This process involves two key stages: accessing your site’s log files to gather the raw data and then deeply analyzing this data to understand search engine behavior and optimize your site’s SEO performance.

Here’s how to conduct a log file analysis:

- Accessing Log Files:

  - Start by retrieving your website’s log files, which are typically accessible via an FTP client like FileZilla. Their location depends on the server configuration; on Apache servers, common defaults are /var/log/apache2/access.log (Debian and Ubuntu) and /var/log/httpd/access_log (Red Hat-based systems).

  - Address common obstacles like disabled logging, managing large log file sizes, or integrating data from multiple servers by working closely with your development team or server administrators.

- Analyzing the Data:

  - Leverage specialized tools, such as Semrush’s Log File Analyzer, to conduct a thorough analysis with ease. These tools can highlight essential metrics like Googlebot’s daily activity on your site, the distribution of HTTP status codes encountered, and the types of files being requested.

  - Focus on sorting the analyzed data by “Crawl Frequency” to evaluate how Google allocates its crawl budget across your site, identifying which pages are prioritized and uncovering potential areas for optimization.
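For readers who prefer to inspect the raw data directly, the analysis stage can be approximated with a short script. This sketch assumes the server writes the common Apache "combined" log format; the sample lines are invented for illustration, and the function name `googlebot_stats` is hypothetical. It counts Googlebot requests per URL (crawl frequency) and per HTTP status code.

```python
import re
from collections import Counter

# Pattern for the Apache "combined" log format (an assumption about your server).
LINE_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def googlebot_stats(lines):
    """Return (hits per path, hits per status code) for Googlebot requests."""
    hits, statuses = Counter(), Counter()
    for line in lines:
        match = LINE_RE.match(line)
        # The user agent can be spoofed; verify important findings
        # with a reverse DNS lookup of the requesting IP.
        if not match or "Googlebot" not in match["agent"]:
            continue
        hits[match["path"]] += 1
        statuses[match["status"]] += 1
    return hits, statuses


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Invented sample lines, for illustration only.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Jan/2024:06:25:24 +0000] "GET /products/red-shoes HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2024:06:26:01 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Jan/2024:06:27:12 +0000] "GET /products/red-shoes HTTP/1.1" 200 5123 "https://www.example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    f'66.249.66.1 - - [10/Jan/2024:07:02:45 +0000] "GET /products/red-shoes HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
]

if __name__ == "__main__":
    hits, statuses = googlebot_stats(SAMPLE_LOG)
    print("Most crawled:", hits.most_common(5))
    print("Status codes:", dict(statuses))
```

Sorting `hits` from most to least crawled gives the same "Crawl Frequency" view described above, and a high share of 404 or redirect statuses points to wasted crawl budget.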

By methodically following these steps, you can perform a comprehensive log file analysis, uncovering valuable insights that can inform targeted improvements to your site’s Tech SEO infrastructure.

Now that you know how to effectively navigate a log file analysis, here are some practical steps for improvement:

- Visual Comparison: Use Google Search Console’s URL Inspection Tool and browser developer tools to compare the crawled and rendered HTML, ensuring Googlebot’s view aligns with user experience.

- Server vs. Client Rendering: Understand the implications of Server-Side Rendering (SSR) and Client-Side Rendering (CSR) on your site’s crawlability and indexability. SSR generally offers better SEO advantages by providing fully rendered content to search engines upfront.

- Crawlability Optimization: Regular audits with tools like Site Audit can pinpoint crawlability issues, guiding you to make necessary adjustments for better search engine accessibility.

Leveraging log file analysis equips you with the data needed to enhance your site’s technical foundation, ensuring that search engines can efficiently crawl, index, and ultimately rank your content. By understanding and acting on the insights derived from log files, you can direct search engine attention to priority content, streamline crawl paths, and resolve technical barriers.

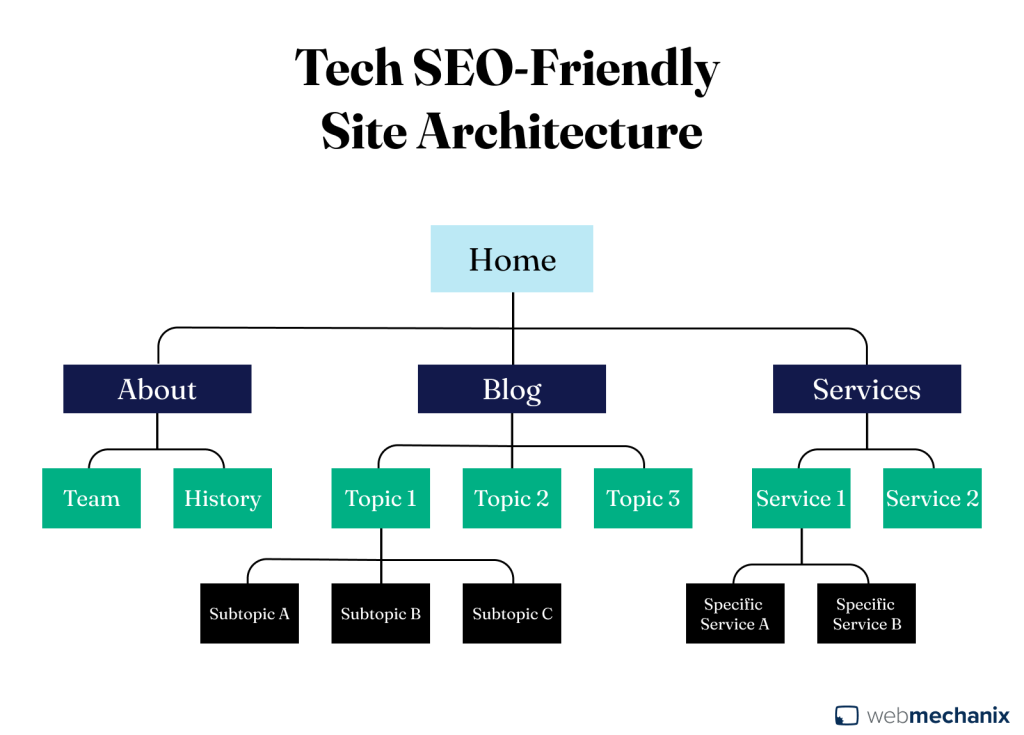

Site Depth

Site depth refers to the number of clicks required to reach a page from the homepage. It’s a crucial factor that affects both how users interact with your site and how search engines assess and index your content. A well-structured site with carefully managed depth ensures that valuable content is easily accessible.

Understanding Site Depth:

- User Experience: A shallow site depth improves navigation, making it easier for visitors to find what they’re looking for without frustration.

- Search Engine Crawling: Search bots have a limited budget for crawling each site. Pages closer to the homepage are more likely to be crawled and indexed regularly.
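Because site depth is defined as the number of clicks from the homepage, it can be computed with a breadth-first search over the internal link graph. The sketch below assumes you already have such a graph (for example, exported from a crawler); the sample site structure and the function names are made up for illustration.

```python
from collections import deque


def click_depths(links: dict, home: str = "/") -> dict:
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict, home: str = "/") -> list:
    """Return known pages that cannot be reached by following links from `home`."""
    reachable = set(click_depths(links, home))
    known = set(links) | {t for targets in links.values() for t in targets}
    return sorted(known - reachable)


# Hypothetical internal link graph: page -> pages it links to.
SITE = {
    "/": ["/shoes", "/about"],
    "/shoes": ["/shoes/red"],
    "/shoes/red": ["/shoes/red/size-guide"],
    "/about": [],
    "/landing-2019": ["/"],
}

if __name__ == "__main__":
    for page, depth in sorted(click_depths(SITE).items(), key=lambda item: item[1]):
        print(depth, page)
    print("Orphans:", orphan_pages(SITE))
```

Pages with a depth above three, and any orphans, are the natural candidates for the internal linking fixes discussed in this section.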

Strategies to Optimize Site Depth:

- Review Your Site Architecture:

  - Conduct a thorough audit of your site’s structure. Use tools like website crawlers to visualize the architecture and identify pages that are nested too deeply.

- Simplify Navigation:

  - Organize content into clear, logical categories and subcategories. Aim for a hierarchy that places the most important pages within two to three clicks from the homepage.

  - Implement breadcrumb navigation to help users and search engines understand and navigate your site structure efficiently.

- Enhance Internal Linking:

  - Boost the visibility of deep-seated pages by increasing internal links from higher-level pages. Focus on contextual linking where it adds value to the user’s journey.

  - Use a sitemap and ensure it’s updated and submitted to search engines, aiding in the discovery and indexing of pages regardless of their depth.

- Limit the Use of Deep Nested Pages:

  - Reevaluate the necessity of having content buried deep within the site. Consolidate pages or bring key content closer to the surface whenever possible.

- Monitor and Adjust Based on Analytics:

  - Keep an eye on page performance metrics. Pages with high bounce rates or low traffic might be suffering from being too deep. Use this data to continually refine your site’s structure.

By addressing site depth with these targeted strategies, you ensure that your content is structured in a way that benefits users and search engines alike.

Navigating Algorithm Changes

The digital landscape is in a state of constant evolution, shaped significantly by search engine algorithm updates. These updates can dramatically influence your site’s visibility and ranking.

While not exclusive to Tech SEO, it’s noteworthy that a majority of Google Core Updates have a profound impact on Tech SEO aspects. Being agile and well-informed allows you to adapt your SEO strategies effectively, safeguarding your site’s position in search rankings and capitalizing on new opportunities for growth.

Adapting to Algorithm Changes:

- Continuous Learning: Stay abreast of official announcements from search engines and reputable SEO news sources to understand the latest changes and their implications.

- SEO Strategy Reassessment: Regularly review and adjust your SEO tactics to align with the current algorithm guidelines, ensuring your site remains compliant and competitive.

Recent Notable Google Updates:

- Local Ranking Algorithm Shift: A potential update on January 4th saw a 5.92 Flux score on BrightLocal’s Local RankFlux, indicating significant local ranking changes. Action: Monitor local rankings closely.

- Rich Snippets Clarification: Google states page content, not structured data or meta descriptions, primarily generates SERP snippets. Action: Focus on creating engaging, relevant webpage content.

- FAQ and HowTo Snippets Vanish: After a brief return, FAQ and HowTo rich snippets disappeared again without official word from Google. Action: Keep FAQ content updated and monitor GSC for changes in Search Appearance.

- Crawl Rate Limiter Removed: Google has phased out the Crawl Rate Limiter, citing improved crawling logic. Action: No immediate action is needed; the removal is part of Google’s enhancements.

- Schema Markup v24.0: Introduces new subtypes for Physicians and supports the suggestedAge property, refining healthcare-related search results. Action: Update Schema implementations as relevant.

- Mobile-First Indexing Completion: After nearly seven years, Google announces the full rollout of mobile-first indexing. Action: Ensure your site is fully mobile-friendly, addressing any mobile usability issues.

- Reduced Legacy Desktop Googlebot Crawling: With mobile-first indexing complete, Google will scale back the use of its legacy desktop crawler. Action: Prioritize mobile site optimization and monitor for any crawling changes.

These updates underscore the importance of staying agile in your SEO strategy, regularly reviewing and adapting to Google’s evolving landscape to maintain and enhance your site’s performance and visibility.

Implementing Changes for SEO Success:

- Audit Your Content and SEO Practices: In light of these updates, evaluate your site’s use of structured data, review management, and the visibility of promotional offers to ensure compliance and optimization.

- Engage with Your Audience: Consider how new features like direct discount codes in search results or sentiment emojis can be used to enhance your online presence and connect more effectively with users.

- Monitor Performance: Keep a close eye on how these changes impact your site’s performance. Use analytics to track shifts in traffic, engagement, and rankings, adjusting your strategy as needed.

By proactively responding to search engine algorithm updates with strategic adjustments, you can not only safeguard your site’s SEO standing but also capitalize on new opportunities to enhance visibility and engagement. Staying informed and flexible is key to navigating the ever-changing SEO landscape successfully.

Embracing these underutilized Tech SEO strategies aligns perfectly with the 80/20 rule, focusing your efforts on what truly matters and yields the most significant impact. This approach not only streamlines your optimization efforts but also sets your site apart in the competitive digital landscape, leveraging targeted actions for maximum effectiveness and distinguishing your SEO practices in a crowded field.

Identifying Lower-Impact Tech SEO Tasks

Applying the 80/20 rule within Tech SEO sharpens our focus, revealing that tasks vary widely in their ability to enhance your site’s SEO performance. By acknowledging which efforts yield minimal returns, SEO professionals can better allocate their resources.

Obsessing over meta keywords, excessively tweaking header tags, and prioritizing minor site speed enhancements often lead to significant resource expenditure with little to no impact. This understanding is crucial in guiding SEO practitioners to focus their efforts on the high-impact tasks that align with the 80/20 rule, streamlining their strategy to emphasize actions that genuinely drive meaningful improvements.

Here’s a deeper dive into some of the tasks with diminished impact:

- Marginal Site Speed Improvements: Although site speed is a critical factor for user experience and SEO, obsessing over minor improvements—especially when the site already performs adequately—can lead to diminishing returns. The focus should instead be on noticeable performance bottlenecks that directly impact user satisfaction.

- Excessive Focus on XML Sitemap Updates: While having an up-to-date XML sitemap is important, obsessively updating it for minor content changes won’t significantly boost SEO if your site is already being regularly crawled and indexed.

- Micro-optimizations in URL Structure: While clear and descriptive URLs are beneficial, minor tweaks in URL wording or structure have diminishing SEO returns, especially if they lead to frequent changes that can confuse search engines and users.

- Obsession with Link Disavowal: The practice of disavowing backlinks is intended for extreme cases of spammy or toxic link profiles. Regular use of the disavow tool for minor or doubtful links can be unnecessary and time-consuming, with little positive impact on most sites’ SEO performance.

- Unnecessary Redirect Chains: While redirects are sometimes necessary, creating complex chains of redirects in an attempt to sculpt page authority or fix minor issues can actually dilute signal passing and slow down site performance, rather than providing any tangible SEO benefit.

- Excessive Internal Linking with Exact Match Anchor Text: Over-optimization of internal linking using exact match anchor text can appear manipulative to search engines and may not provide the user experience or ranking boost anticipated.

- Misconceptions in the SEO Community: Some tasks persist in SEO discussions based on outdated information or misconceptions about their current impact. Staying updated with the latest search engine guidelines and empirical studies helps debunk myths and realign focus toward more impactful strategies.

- Obsessing Over URL Length: While clear and descriptive URLs are beneficial, excessively worrying about the length of URLs has a minimal impact on SEO. A well-structured, readable URL is more important than its length.

- Over-Reliance on Geo-Targeting for Global Sites: Geo-targeting can be helpful, but over-reliance on it without considering global content appeal and relevance might not yield the expected SEO boost for international audiences.

- Excessive Image Metadata Optimization: Some believe that meticulously optimizing metadata (such as EXIF data) in images can significantly impact SEO. In reality, the SEO benefits are minimal compared to ensuring images are properly compressed, load quickly and are relevant to the content.

- Prioritizing Image Format Conversion Over Content Quality: Converting images to the latest formats (like WebP) can improve loading times, but focusing too much on image formats rather than the quality and relevance of the visual content can misplace effort. User engagement with high-quality, relevant images outweighs the slight SEO boost from format optimization.
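On the redirect-chain item above: when chains do exist, the useful fix is usually to collapse them so that every old URL points straight at its final destination. The following is a minimal sketch of that idea, assuming the redirects are available as a simple source-to-target mapping; the sample paths and function names are hypothetical.

```python
def resolve_chain(redirects: dict, start: str, max_hops: int = 10) -> list:
    """Follow redirects from `start` and return every URL visited, in order."""
    chain = [start]
    seen = {start}
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in seen:  # redirect loop detected, stop following
            break
        seen.add(target)
    return chain


def flatten_redirects(redirects: dict) -> dict:
    """Map every source directly to its final destination, skipping loops."""
    flat = {}
    for source in redirects:
        final = resolve_chain(redirects, source)[-1]
        if final in redirects:
            # Loop or hop limit reached: leave for manual review.
            continue
        flat[source] = final
    return flat


if __name__ == "__main__":
    # Hypothetical redirect rules, for illustration only.
    rules = {"/a": "/b", "/b": "/c", "/c": "/d", "/x": "/y", "/y": "/x"}
    print(resolve_chain(rules, "/a"))
    print(flatten_redirects(rules))
```

Here `/a`, `/b`, and `/c` all end up pointing directly at `/d`, while the `/x` and `/y` loop is excluded so it can be fixed by hand.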

Avoiding these lower-impact activities and concentrating on high-value strategies allows SEO experts to boost their site’s performance more effectively. This approach adapts seamlessly to the dynamic nature of search engine algorithms.

Maximizing Tech SEO with Strategic Tool Use

Tech SEO tools are indispensable for diagnosing and enhancing your website’s backend and overall SEO health. While the landscape of these tools is vast and varied, understanding how to leverage them effectively can transform your SEO strategy from good to exceptional.

Here’s how to navigate this landscape, ensuring your efforts are focused and impactful, in line with the 80/20 rule for efficiency and effectiveness.

Strategic Application of SEO Tools

These tools equip SEO professionals with the insights needed to focus on impactful improvements, and they are instrumental in pinpointing and rectifying the technical issues that hinder a website’s SEO performance:

- Efficient Problem Identification: Use tools to quickly uncover issues such as crawl errors, slow page speed, duplicate content, and improper redirects that could undermine your SEO efforts.

- In-Depth Site Audits: Platforms like Screaming Frog and Semrush excel in offering comprehensive site audits, highlighting areas for improvement from site architecture to on-page SEO elements.

- Insights from Google: Leverage Google’s suite, including Google Search Console and Google Analytics, for direct insights on indexing, performance metrics, and Tech SEO issues, straight from the search engine itself.

Key Tools for Tech SEO Mastery

Navigating the technical aspects of SEO requires a robust toolkit. Below, we highlight essential tools that span the spectrum of Tech SEO needs, from site audits to in-depth analysis, catering to various expertise levels and business sizes.

- Screaming Frog: Ideal for crawling websites to identify SEO problems, with a free version suitable for smaller sites.

- Semrush: Offers extensive features for SEO, PPC, and content marketing, making it perfect for large-scale analyses.

- Google Search Console: Essential for understanding how Google views your site, providing data on indexing, organic performance, and technical issues.

- Ahrefs: Helps with identifying technical and on-page SEO issues, offering detailed categorization and reporting.

- Sitechecker and SE Ranking: User-friendly and affordable options for newcomers and small businesses, offering comprehensive SEO audits and ongoing monitoring.

- Lumar (DeepCrawl), OnCrawl, and Ryte: Advanced tools for in-depth analysis, focusing on everything from page speed and content quality to holistic SEO strategy development.

- Moz Pro: An all-in-one solution for SEO professionals, facilitating workflow with its wide range of technical SEO checks.

Each tool offers unique capabilities to diagnose, analyze, and optimize your website, ensuring it meets the highest standards of search engine optimization.

Optimizing Tool Use

To maximize the impact of your SEO efforts, it’s crucial to not just use tools but to use them wisely. Here’s how to optimize the use of SEO tools to refine key aspects of your website’s performance and visibility:

- Prioritize Content Quality: Audit tools consistently show that content quality and relevance are paramount; structured data and meta descriptions enhance, but do not replace, substantive page content.

- Monitor Mobile Usability: Now that Google has completed its move to mobile-first indexing, use tools like PageSpeed Insights and Lighthouse to ensure your site caters to mobile users. (Google retired its standalone Mobile-Friendly Test in December 2023.)

- Structured Data Testing: Use Google’s Rich Results Test to validate schema markup, which is crucial for enhancing content visibility in search results.
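Before pasting a page into Google’s testing tool, a quick local pre-check can catch obvious markup problems. The Python sketch below pulls JSON-LD blocks out of a page and flags missing fields. The `EXPECTED_FIELDS` set is an assumption chosen for this example and is not Google’s official list of required properties; Google’s own tool remains the authority on rich result eligibility.

```python
import json
from html.parser import HTMLParser

# Assumed minimal field set for this sketch; not Google's official requirements.
EXPECTED_FIELDS = {"Article": {"headline", "author", "datePublished"}}


class JsonLdExtractor(HTMLParser):
    """Collects the contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def check_markup(html):
    """Return a list of human-readable problems found in the page's JSON-LD."""
    parser = JsonLdExtractor()
    parser.feed(html)
    problems = []
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            problems.append("invalid JSON in ld+json block")
            continue
        if not isinstance(data, dict):
            continue  # arrays of items are not handled in this sketch
        schema_type = data.get("@type")
        expected = EXPECTED_FIELDS.get(schema_type, set()) if isinstance(schema_type, str) else set()
        missing = sorted(expected - set(data))
        if missing:
            problems.append(f"{schema_type}: missing {', '.join(missing)}")
    return problems


# Hypothetical page with an incomplete Article block.
SAMPLE_HTML = """
<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Article", "headline": "Why Only 20% of Tech SEO Matters"}
</script>
</head><body></body></html>
"""

PROBLEMS = check_markup(SAMPLE_HTML)
print(PROBLEMS)
```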

By selecting and utilizing the right mix of Tech SEO tools, you can diagnose issues more accurately, streamline your optimization process, and ultimately, achieve better rankings and user engagement.

Remember, the power of these tools lies not just in their sophisticated analyses but in how you apply these insights to make data-driven optimizations that resonate with both search engines and users.

Tech SEO and NLP in SGE

The integration of Natural Language Processing (NLP) and Generative AI into search engines represents a significant transformation in SEO strategy. These technological advancements have empowered search engines to comprehend and interpret human language with unparalleled depth, thereby paving new paths for the optimization of digital content.

- Natural Language Processing (NLP): NLP is a branch of artificial intelligence that focuses on the interaction between computers and humans through natural language. It enables machines to understand, interpret, and generate human language in a way that is both meaningful and useful.

- Generative AI: Generative AI refers to algorithms capable of creating content, whether text, images, or videos, that mimic human-like creativity and expression. In the context of search engines, Generative AI helps generate responses that closely align with user queries, providing more accurate and contextually relevant search results.

- Search Generative Experience (SGE): SGE is Google’s innovative approach to integrating Generative AI into its search ecosystem, aiming to revolutionize how search queries are interpreted and answered. By leveraging advanced NLP and AI technologies, SGE allows Google to provide search results that are not only highly relevant but also conversational and contextually rich. This initiative underscores Google’s commitment to enhancing the search experience, making it more intuitive and aligned with natural human interaction.

Learn how leveraging NLP in Tech SEO can position your content at the forefront of the Generative AI revolution in search engines, giving you a competitive edge in achieving higher relevance, authority, and visibility as we step into a transformative era dominated by AI advancements.

Strategic Insights into NLP and Generative AI:

- Beyond Keywords to Conversational Queries: Modern search engines utilize NLP to decode the semantic meaning of searches, moving from keyword matching to understanding user intent. This evolution demands content that answers questions as naturally as they are asked, incorporating long-tail phrases and addressing user queries directly.

- The Role of Entities and Context: Entities have become crucial in how search engines interpret content. Utilizing named entity recognition, content creators can structure their content around identifiable entities and topics, enhancing its discoverability and relevance.

- Embracing Generative AI: With language models like BERT and the introduction of Generative AI models, search engines can now comprehend the context and nuances of language, offering results that closely match the user’s search intent. Understanding how these models interpret content and building that into your SEO strategy can significantly improve content alignment with user expectations.

Optimizing for a Click-Less Future with SGE:

As we navigate towards a click-less future powered by advancements in Google’s Search Generative Experience (SGE), the strategy for optimizing content must evolve. This new landscape demands a focus on leveraging technology and content quality in ways that align with how AI interprets and presents information:

- Leverage Structured Data for Clarity: Utilize structured data markup to clearly define entities within your content, helping search engines easily categorize and display your information in rich results.

- Prioritize Content Depth and Quality: In an era where generative AI can produce content rapidly, distinguishing your brand through in-depth analysis, unique insights, and high-quality information becomes paramount.

- Focus on E-E-A-T: Emphasize Experience, Expertise, Authoritativeness, and Trustworthiness in your content. Highlight real-world experiences, expert opinions, and authoritative sources to stand out in a crowded digital landscape.
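As a rough illustration of defining entities with structured data, the Python sketch below assembles schema.org Article markup that names the author, publisher, and topics explicitly. The helper names, the author "Jane Doe", and the publisher "Example Agency" are hypothetical placeholders; the types and properties used (`Article`, `Person`, `Organization`, `Thing`, `headline`, `author`, `publisher`, `about`) come from the schema.org vocabulary.

```python
import json


def build_article_jsonld(headline, author_name, publisher_name, topics):
    """Describe an article and its related entities as a schema.org Article."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "publisher": {"@type": "Organization", "name": publisher_name},
        # Each topic becomes an explicit entity the page is "about".
        "about": [{"@type": "Thing", "name": topic} for topic in topics],
    }


def to_script_tag(data):
    """Serialize the markup into a script tag ready to place in the page head."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so the content cannot close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values for illustration only.
MARKUP = build_article_jsonld(
    headline="Why Only 20% of Tech SEO Matters",
    author_name="Jane Doe",
    publisher_name="Example Agency",
    topics=["Technical SEO", "Structured data"],
)
TAG = to_script_tag(MARKUP)
print(TAG)
```

Generating the markup from the same data that drives the page keeps the structured data in step with the visible content, which matters because markup that contradicts the page undermines the trust signals it is meant to support.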

As SGE and Generative AI begin to redefine the norms within SERPs, applying the 80/20 rule underscores the importance of preparing your website for this shift. Focusing 20% of your efforts on aligning with SGE principles can yield 80% of your future SEO success.

As the search landscape continues to evolve, staying informed and adaptable will be key to leveraging these technologies for SEO success. By focusing on creating genuinely valuable content and utilizing the latest in AI and NLP, brands can ensure they not only meet the current standards but are also well-prepared for future developments in search technology.

Streamlining Tech SEO Strategies for Maximum Impact

As we’ve navigated through the intricate layers of Tech SEO, from its foundational elements to the cutting-edge integration of NLP and Generative AI, it’s evident that not all SEO tasks are created equal. Emphasizing the 80/20 rule allows us to channel our focus toward those strategies that offer the most substantial impact on our website’s performance and visibility in this AI-driven era of search engines.

To thrive in the dynamic digital landscape, it’s important to prioritize those tasks that align with the core principles of Tech SEO, ensuring our sites are not only technically optimized but also prepared to leverage the capabilities of Generative AI and SGE. By concentrating on the vital 20%—from enhancing crawlability and indexability to embracing structured data and accessibility—we position ourselves to capture 80% of the potential benefits, setting a strong foundation for SEO success.

The journey through Tech SEO is a continuous one, with the landscape ever-evolving as search engines become more sophisticated. Staying informed, adaptable, and focused on impactful strategies will be crucial in navigating these changes. Let’s embrace the 80/20 rule, optimizing our efforts for efficiency and effectiveness, and ensure our SEO practices not only meet the current standards but are also well-prepared for future advancements in search technology.

Want to learn more about how WebMechanix can help with your SEO? Let’s talk.
